March 10, 2025

Choosing the right file format for photography is critical if you want high quality, but it’s also very confusing. There are a number of file formats, file details like color space and bit depth, hidden details, HDR considerations, and an endless number of considerations for the software or hardware others might use to view your images. In this tutorial, you’ll learn the best options to ensure your work looks great and is easy to share.

This topic is so deep that no one person really understands everything under all possible scenarios, so it probably helps to understand my background and how I’ve reached the conclusions below. I’ve been working with digital photography, digital printing, the web, and Adobe software for over 25 years. I teach photography to a wide range of people using a large variety of software, hardware, and web services to share their work. And as a software developer, I have spent years testing and optimizing image exports for the web, including encoding custom gain maps to optimize images for the new HDR display technology. I’ve processed and shared thousands of images, learned from countless mistakes, and received feedback from a large number of photographers who care deeply about the quality of their work. But I have not done rigorous, scientific study of all these topics (that would be a full time job on its own).

The discussion below will cover many critical details, but let’s start with a high-level overview of the most important information.

TLDR: Shoot RAW, edit PSB in a wide gamut, and share on the web as JPG (with a gain map if HDR). In about a year, AVIF should replace your use of JPG.

I recommend photographers use the following file formats and settings:

- Capture RAW images with the highest bit depth available.

- (exception: if you do not intend to process your images, capturing in a lower quality format like JPG or HEIF may be ideal to support faster workflows)

- Save your layered working files as PSB

- Use 16 or 32-bit (never 8-bit)

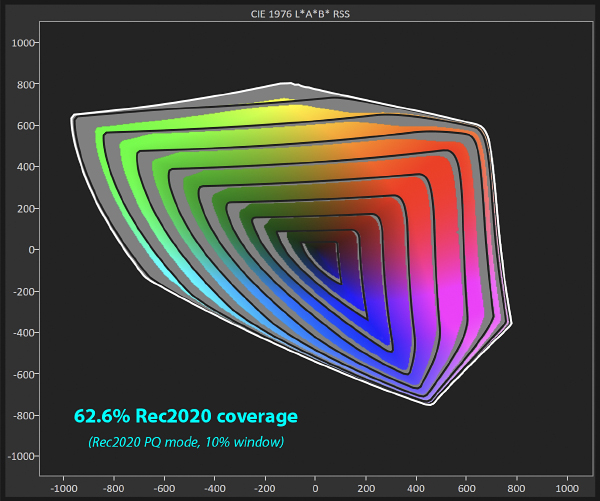

- Use a wide gamut colorspace (Rec2020 is ideal, but P3, Adobe RGB, and ProPhoto RGB are all great)

- TIF or PSD are fine and may be better supported outside Photoshop/Lightroom, but PSB is preferable because the file size is effectively unlimited, which is increasingly important with modern cameras and non-destructive workflows.

- JXL is a great format for sharing directly with other professionals (assuming they use supporting software, such as LR / ACR).

- JXL would be an ideal way to send images to your lab for print if they support it (higher quality than JPG, much smaller file size than TIF, and no size limit like AVIF).

- Sharing on the web:

- Sharing your standard (SDR) images on the web today depends on the service you use:

- JPG with sRGB is safe to use anywhere

- JPG with P3 is ideal, if you check to confirm that the color profile will not be stripped when your image is uploaded (which would cause your image to look desaturated)

- Share HDR images on the web using JPG with a gain map

- HDR images lacking a gain map will render inconsistently and often at very low quality

- JPG with a gain map is currently the only HDR format which is 100% safe on all browsers

- In the near future, AVIF will be an even better option than JPG

- AVIF offers higher quality and smaller sizes than JPG, as well as support for even wider gamuts (Rec2020).

- AVIF should be a good option by early 2026 (SDR AVIF is supported by all modern browsers, and HDR with or without ISO gain map is supported in the latest version of nearly all major browsers).

- File formats you should not share on the web at this time:

- Do not share any HDR image which does not include a gain map. This will result in significant loss of quality that you won’t notice (but it will affect many people with displays less capable than yours). See “Great HDR requires a gain map” for more info. I have seen numerous people make this mistake trying to share AVIF or JXL to Instagram – you should avoid those formats until there is proper support for uploading them with gain maps (you can upload them now on some devices, but your gain map will be discarded and the image will degrade significantly).

- JXL is only supported by 14% of web browsers (even if Chrome and Firefox added support it would take years for adoption rates to get into the high 90s). This is unfortunate because as great as AVIF is, JXL has some advantages including: lossless re-compression of JPG images on existing websites, faster encoding speed, and better support for progressive rendering over slow internet connections.

- HEIF (aka HEIC) is only supported by 14% of browsers, and this is not likely to grow significantly.

- I would skip WebP. AVIF has nearly as much support (94% vs 97%), but offers higher image quality (10-bit), support for HDR (WebP cannot support gain maps), and smaller file sizes. The primary benefit of WebP over AVIF is that it is faster to encode, so it may appeal to some large scale content providers.

- You could arguably skip PNG now. AVIF has nearly as much support (94% vs 97%) for transparency and nearly lossless quality with much smaller file sizes. However, PNG offers true lossless and has slightly higher browser support today.

- The best format for sharing on your own personal devices is generally the same as what you’d use on the web

- Using the same format ensures you can easily share from your phone/tablet

- For HDR, iOS and Android have great support for JPG gain maps (you should not share AVIF / JXL yet and they generally will not adapt optimally when headroom is limited, but they would use less space on your device).

Before we get into the specifics of the various file formats, let’s dive into a few technical details which apply across the various formats.

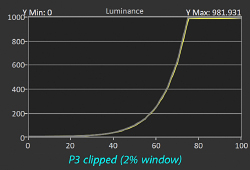

Bit depth

Image data gets stored as bits (1s and 0s). Bit depth describes the number of bits used for each red, green, and blue value in your pixels. With more bits, you can properly encode a greater number of colors or shades of grey. If you don’t have enough bits, then there may be a visible jump from one value to the next (this rounding error is known as “quantization error”). Gradients (such as blue skies) require subtlety and are where you will most likely see problems (banding).

When you use more bits, the data is more accurate but the file size is larger. So the ideal bit depth is the point where adding more does not improve the perceived quality of the image. There are a number of factors which may affect the requirements, but they all come down to how much you might stretch the data, as that makes the jumps bigger. So you may need more bits to protect for further editing, for HDR (which has a much wider range of possible luminance), or very wide gamuts (though not likely).
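To make the idea of “jumps” concrete, here is a minimal sketch that counts how many distinct shades survive when a subtle gradient is rounded to a given bit depth. The function names and the sample gradient (a gentle ramp from 50% to 55% brightness across 4000 pixels) are my own illustrative assumptions, not something taken from any particular software.

```python
def levels(bits: int) -> int:
    """Number of distinct values one channel can store at this bit depth."""
    return 2 ** bits


def quantize(value: float, bits: int) -> float:
    """Round a 0..1 value to the nearest step available at this bit depth."""
    steps = levels(bits) - 1
    return round(value * steps) / steps


def distinct_shades(start: float, end: float, width: int, bits: int) -> int:
    """Count the distinct shades left after quantizing a smooth gradient."""
    ramp = [start + (end - start) * i / (width - 1) for i in range(width)]
    return len({quantize(v, bits) for v in ramp})


# Hypothetical example: a subtle sky-like gradient, 4000 pixels wide.
for bits in (8, 10, 16):
    shades = distinct_shades(0.50, 0.55, 4000, bits)
    print(f"{bits}-bit: {levels(bits)} levels per channel, {shades} shades in the ramp")
```

At 8 bits, the ramp collapses to only about a dozen shades spread over thousands of pixels, which is exactly the kind of situation where banding can become visible. Higher bit depths leave far more steps to work with.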

As a general rule, you need:

- Final content (for sharing, no further editing)

- 8 bits for SDR (“standard dynamic range”) content, though 10-bits can be useful in some rare cases with smooth gradients.

- 10 bits for HDR (“high dynamic range”) content, though 12-bits can be useful in some rare cases (and you can get away with 8-bits in many cases for images lacking gradients – I’ve seen some great JPGs encoded as a simple 8-bit image with no gain map).

- Working files (when further editing may be required)

- 16-bits for SDR working files

- 32-bits for HDR working files (though you could get away with 16-bit in many cases)

For examples and more information, see “8, 12, 14 vs 16-bit depth: What do you really need?“.

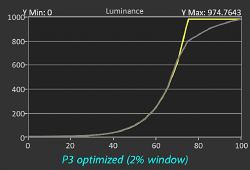

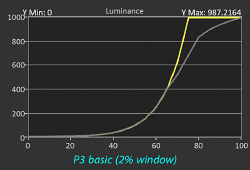

HDR Gain maps

Modern displays offer HDR (“high dynamic range”). Support is already the norm for Instagram and Threads, and in time will be widespread. When these images are viewed on less capable displays, they must be adapted. That can occur in one of two ways: automatically (tone mapping) or with your input as the artist (using a gain map). Exporting your HDR image with a gain map will significantly improve image quality on any display less capable than yours. Never share your image without a gain map unless you place a much higher priority on image size over image quality.

There is a lot of confusion around gain maps. They are often misperceived as some sort of “hack” to allow HDR support in JPG (which only supports 8-bits). It is true that they allow JPG to show HDR without banding (a JPG with a gain map is actually two 8-bit images, which is not the same as 16-bit quality – but it is much higher quality than just 8-bits and looks great for HDR). But the most important benefit of gain maps is that they allow proper adaptation of HDR to less capable displays, and this applies to any file format. Even higher bit depth formats like AVIF or JXL need a gain map to ensure high quality when sharing HDR.
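The adaptation idea can be sketched in a few lines. This is a deliberately simplified illustration, not the actual gain map specification (which includes additional parameters such as offsets and a gamma for the map). The function name and its parameters are hypothetical. The assumption here is that the map stores, for each pixel, how many stops brighter the HDR version is than the SDR base, and that the display applies only as much of that boost as its headroom allows.

```python
def apply_gain(sdr_linear: float, gain_stops: float,
               display_headroom_stops: float, map_max_stops: float) -> float:
    """Brighten one linear SDR pixel value using its gain map value.

    Simplified assumption: the weight is 0 on an SDR display (no headroom),
    1 on a display with at least the headroom the map was made for,
    and proportional in between.
    """
    weight = min(max(display_headroom_stops / map_max_stops, 0.0), 1.0)
    return sdr_linear * 2.0 ** (gain_stops * weight)


# A highlight pixel that the artist wants 2 stops brighter in HDR:
print(apply_gain(0.5, 2.0, 0.0, 2.0))  # SDR display: base image unchanged
print(apply_gain(0.5, 2.0, 1.0, 2.0))  # limited HDR display: partial boost
print(apply_gain(0.5, 2.0, 2.0, 2.0))  # full HDR display: full boost
```

The key point is that the SDR result is the base image you approved, and everything in between is interpolated from your two versions rather than guessed by an automatic tone mapper.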

Note that when saving HDR images in Adobe Lightroom / Camera RAW, the “maximize compatibility” option is how you request a gain map. I believe this name is a little misleading, as the HDR images can still be viewed on SDR displays (they are compatible), but the quality is much lower. A more accurate name for this checkbox would be “ensure high quality on SDR / limited-HDR displays”, but that’s a mouthful.

Gain maps are well supported now for JPG, and there is limited support already for gain maps for AVIF, JXL, and TIF. Support is coming for most common formats which are capable of encoding a secondary image (including PNG – but I don’t believe webp is possible or likely).

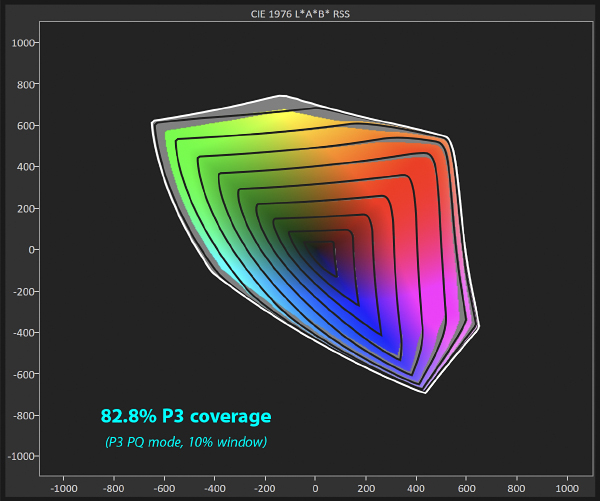

Color space (primaries, aka “gamut”)

Whereas bit depth affects the precision of color, the color space affects the range of color (ie “gamut”). These maximum red, green, and blue values are technically known as “primaries”. A wider gamut allows us to show more vibrant colors. The benefit of a wider gamut isn’t just being more colorful, it also helps to avoid clipping color gradients. For example, the details of a red rose petal may be lost without a wide gamut, or a colorful light shining on a wall may show a strange artifact where the color clips at the limit of the gamut.
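As a rough illustration of gamut clipping, the sketch below converts a linear Display P3 color to linear sRGB and checks whether any channel falls outside the 0 to 1 range. The matrices are commonly published D65 values rounded to seven decimals, and the helper names and the small tolerance are my own assumptions. Real color management (ICC conversions, rendering intents, transfer functions) involves more than this.

```python
# Linear Display P3 -> CIE XYZ (D65), then XYZ -> linear sRGB.
# Commonly published matrix values, rounded (treat as approximate).
P3_TO_XYZ = [
    [0.4865709, 0.2656677, 0.1982173],
    [0.2289746, 0.6917385, 0.0792869],
    [0.0000000, 0.0451134, 1.0439444],
]
XYZ_TO_SRGB = [
    [3.2404542, -1.5371385, -0.4985314],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0556434, -0.2040259, 1.0572252],
]


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-element vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def p3_to_srgb_linear(rgb):
    """Convert linear-light Display P3 RGB to linear-light sRGB (unclipped)."""
    return mat_vec(XYZ_TO_SRGB, mat_vec(P3_TO_XYZ, rgb))


def clips_in_srgb(rgb, tolerance=1e-3):
    """True if the P3 color cannot be represented inside the sRGB gamut."""
    return any(ch < -tolerance or ch > 1 + tolerance
               for ch in p3_to_srgb_linear(rgb))


print(clips_in_srgb([1.0, 0.0, 0.0]))  # fully saturated P3 red
print(clips_in_srgb([0.5, 0.5, 0.5]))  # neutral grey
```

A fully saturated P3 red lands outside sRGB (its red channel exceeds 1 and the others go negative), while a neutral grey fits comfortably. Out-of-range values like these are what get clipped when a vibrant subject such as the rose petal is forced into a smaller gamut.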

There are many factors to consider in choosing a colorspace. Ideally, the gamut is wide enough to avoid clipping any color in your image. Colors found in nature would generally be contained within “Pointer’s gamut” (see here for comparisons to common colorspaces). ProPhoto includes all of Pointer’s gamut, but also many values outside the range of human vision. Rec2020 is an excellent match with Pointer’s gamut (technically missing a tiny bit of blue, but you wouldn’t have a monitor supporting it). AdobeRGB does a decent job, but lacks some colors supported on monitors. P3 does a decent job, but lacks some colors supported by printers and by monitors which support more of the Rec2020 gamut.

sRGB is a very limited colorspace based on the limits of monitors from 30 years ago. However, it remains relevant for one annoying reason. Many websites strip the color information from your photo when you upload it (to save a few bytes). When this happens, anything other than sRGB encoding will show up as a desaturated image. This is unfortunate, as wide gamut is safe to use in all browsers and supported by most monitors. This practice of stripping color should hopefully go away as websites move to newer file formats like AVIF (which use a 4-byte CICP for color instead of 500-1000 bytes for an ICC profile in a JPG).

As a general rule:

- sRGB is always a safe choice and may be required to avoid desaturation on some web services.

- P3 (or Rec2020) is ideal for sharing higher quality images when you know the color profile will not be stripped (just check that it does not look desaturated after upload).

See “How to soft proof easily in Photoshop” for more on how clipping color gamut affects your image.

We tend to think of “color space” as just referring to the range of potential color (which are defined by the primaries). In reality, the color space includes other information such as the white point or transfer characteristics (often called “gamma”, but there are many other options which are not simple gamma curves, such as sRGB or PQ). You generally do not need to think about these as the best values are typically assumed or set for you. For example, when you choose sRGB, you get the sRGB primaries (same as rec709), the sRGB transfer function (somewhat different from rec709), and D65 for the white point. These factors are adjusted for you. For example, sRGB uses a D65 white point and a transfer function close to gamma 2.2, but ProPhoto uses D50 and gamma 1.8. But when you convert a flattened image from one to the other, the image will look identical (unless your ProPhoto image contains colors beyond the sRGB primaries, then that color will clip).

To be clear, the “gamma” here is encoding of the data and does not affect the display (which has its own “gamma”, and that definitely does matter). The only time the gamma encoding of the image would matter is if you used an inefficient gamma with low bit depth (for example, saving linear data at 8-bits would be a very poor choice). If you did have such a mismatch in the extremes, you might see banding (because not enough data would be allocated for the shadow values that we are more sensitive to).
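To see why linear data at 8 bits is a poor choice, this small sketch counts how many of the 256 available code values describe the darkest 1% of linear light under two encodings: plain linear and the standard sRGB transfer function. The 1% threshold and the helper names are arbitrary assumptions chosen for illustration.

```python
def srgb_to_linear(v: float) -> float:
    """Standard sRGB decoding: encoded 0..1 value -> linear light 0..1."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def shadow_codes(decode, threshold: float = 0.01, bits: int = 8) -> int:
    """Count code values whose decoded linear light is below the threshold."""
    top = 2 ** bits - 1
    return sum(1 for code in range(top + 1) if decode(code / top) < threshold)


linear_count = shadow_codes(lambda v: v)   # data stored as linear light
srgb_count = shadow_codes(srgb_to_linear)  # data stored with the sRGB curve
print(f"linear 8-bit: {linear_count} codes for the deepest shadows")
print(f"sRGB 8-bit:   {srgb_count} codes for the deepest shadows")
```

The linear encoding leaves only a handful of codes for the deepest shadows, while the sRGB curve allocates a couple dozen to the same range. That difference is why shadow banding shows up when an inefficient encoding is paired with a low bit depth.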

And going down the rabbit hole here for those of you exploring HDR… gain maps are a totally different animal. Many people incorrectly assume that these images are encoded with PQ or HLG. That is almost never the case today because the base image in a JPG is encoded as SDR. It will have a gain map which has either 1 or 3 gammas, as the map itself is not an image – it is just mathematical data to adjust from the base image. So a JPG gain map image could contain up to 4 different gamma values! When we start using gain maps in formats like AVIF that support 10-bit depth or more, we may see PQ or HLG used there (if the base image is encoded for HDR, which won’t be likely initially in order to ensure greatest compatibility until support is very widespread).

Compression

Compression is a measure of the file size required to achieve a given level of quality. An image that is identical to the original is considered lossless. An image which looks the same to a human would be known as “visually lossless”. And an image which is visibly inferior would be referred to as “lossy” compression. Any of those standards for quality may be appropriate, depending on your needs. Most photographers should aim for something which is visually lossless when viewed at 100%, or perhaps when zoomed in if that is important to you. True lossless encoding is rarely beneficial for encoding of an image to be shared on the web, and therefore should be avoided to optimize file size.

Progressive rendering

When viewing images on a slow connection, progressive rendering allows the viewer to see some preview before the image is fully downloaded. This is most important for viewing on mobile devices in areas with slow service or if you are sharing very high resolution images.

Depending on the file standard and encoding, you may see different rendering experiences:

- Sequential shows the image slowly revealed in full resolution from top to bottom. JPG may do this.

- Progressive shows a low resolution preview which increases in resolution as the data comes in.

- Progressive rendering in JPG may show as greyscale or false color initially and then show correct color

- JPEG XL will show proper color even at the lowest quality preview.

- No preview: nothing will show until the base image is ready to show in full resolution. AVIF does this.

- Note that gain maps will render the base image in the manner typically used for the file type, and then the gain map will be applied later when it is ready.

- So in a slow situation, a JPG gain map (which has an SDR base) may show progressive loading in SDR and then suddenly jump to HDR later (if the display supports HDR).

- An AVIF gain map with an SDR base would show the SDR base all at once when ready, and then could jump to HDR when the map is ready (if the display supports HDR).

- An AVIF gain map with an HDR base would show the HDR base all at once when ready. If the display does not support the full HDR, then automatic tone mapping would be used until the map was loaded and then the image quality would be improved using the map to derive the best results.

- JXL gain maps are not supported in any browser, but their superior progressive rendering would allow the best results.

- If you are concerned with progressive rendering with gain maps, the best solution today is to avoid excessively high resolution. Formats like AVIF are smaller and will help improve time to full render. In the future when we can share images encoded with an HDR base, that would allow the initial preview to render at the correct brightness (even if any required tone mapping might be sub-optimal until the map loads). If we could share JXL with an HDR base, that would be the ideal scenario as it would show a very usable preview almost right away.

See Theo’s demonstration of progressive vs sequential rendering.

Other key considerations

There are several other factors which may determine the best format to use:

- Support for the format. The best image format in the world is useless if your viewer can’t see it.

- Layers. Obviously, you’ll want support for saving your working files for non-destructive workflows. And of course you wouldn’t use these for the web as layers make the image much larger.

- Max pixel dimensions or file size. This isn’t much of an issue for images to be shared on the web or shown on a screen, but it definitely affects layered images and those prepared for print.

- Color sub-sampling (444 vs 422 vs 420).

- You generally won’t have a choice and don’t need to think about this (the defaults are typically great).

- If you’re curious, 444 means that the color data is full resolution (higher quality but larger files). As humans don’t see color in high resolution, sub-sampling is used to share color with neighboring pixels to reduce image size.

- Transparency. This doesn’t matter to most photographers, but it is helpful for showing products or other images where you’d like to see the background around the subject.

- Encoding speed/efficiency. This doesn’t matter for most photographers, but is a consideration for large web services converting millions of images.

- Decoding speed/efficiency. This isn’t a concern for most photographers, but may matter if you wish to share images with absurdly high resolution or wish to optimize for battery life on mobile devices.

- Animation. This isn’t a concern for most photographers, but you might use it in lieu of video in some niche application.
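For those curious about the color sub-sampling numbers mentioned above, here is a minimal sketch of how much data each scheme stores before compression. It assumes the usual meaning of the labels (one full-resolution brightness channel plus two color channels that are reduced horizontally and/or vertically). The table and function names are my own.

```python
# Divisors applied to the two color (chroma) channels: (horizontal, vertical).
SUBSAMPLING = {
    "444": (1, 1),  # color at full resolution
    "422": (2, 1),  # color at half horizontal resolution
    "420": (2, 2),  # color at half resolution in both directions
}


def samples_per_image(width: int, height: int, scheme: str) -> int:
    """Total stored samples: one luma channel plus two chroma channels."""
    h_div, v_div = SUBSAMPLING[scheme]
    chroma_w = -(-width // h_div)   # ceiling division for odd sizes
    chroma_h = -(-height // v_div)
    return width * height + 2 * chroma_w * chroma_h


full = samples_per_image(4000, 3000, "444")
for scheme in SUBSAMPLING:
    count = samples_per_image(4000, 3000, scheme)
    print(f"{scheme}: {count:,} samples ({count / full:.0%} of 444)")
```

For a 4000×3000 image, 422 stores two thirds of the samples of 444 and 420 stores half, which is the saving sub-sampling buys before the compressor even starts.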

Wild cards: encoding, transcoding, decoding, and display

The topics on this page are clearly enormously complex, and it would be easy to get confused. Unfortunately there are other factors which are important to consider if you are troubleshooting or wish to make comparisons. It is important to know that each of the following can affect your experience:

- Encoding details.

- For example, Lightroom exports HDR AVIF at 10 bits and JXL at 16-bits.

- So these aren’t directly comparable at a given resolution, as the AVIF may be smaller and faster to export just because of the change in bit depth – but the JXL would be much more suitable for further editing.

- Transcoding.

- Transcoding is reprocessing of an image which occurs on most websites. It may be done to reduce file size (causing a loss of quality), reduce pixel dimensions, ensure the image is safe from any security risks, etc.

- This is the cause of enormous confusion on the web, especially for uploading HDR gain maps. Your source image may be perfectly fine, but then it stops working after upload because the image on the web is NOT the same one you created.

- Decoding

- As you can see, there are not just multiple file types, but many very detailed variations within them. For example, there are four different ways to encode a gain map in just the JPG format – and I didn’t even mention that above. File format support is a very nuanced topic.

- Every piece of software you use may use a different decoder. Support in one piece of software or part of an operating system does not mean you’ll have the same result elsewhere. For example, MacOS Preview supports HDR gain maps, but Finder won’t show HDR thumbnails for gain maps (even though TIF and EXR will).

- Display

- Of course, even if the decoder knows how to properly process an image, different displays may show different results.

- The most common differences will be in gamut, HDR, and level of shadow detail.

- This is all unrelated to the file format and encoding, but it’s important to remember that it may affect your experience.

If you are testing to evaluate different options, the most important rules are:

- eliminate as much variability as possible. If you change more than one factor at a time (such as comparing 10-bit AVIF to 16-bit JXL), it’s very hard to draw meaningful conclusions.

- always test your results after uploading to a website, as transcoding may change the result.

- remember that others may have a significantly different display than you, this is particularly important for HDR.

Now that we’ve covered the key technologies, let’s take a look at the various file formats.

DNG

Your files may be in a camera-specific RAW format (NEF, CR2, ARW, etc) or in Adobe’s open format (DNG). I recommend you just leave your image in the RAW format offered by your camera. Select higher bit-depth if that’s an option, full resolution, and it would be ideal to use lossless compression (lossy is generally fine, uncompressed creates large files you can avoid).

Some would suggest using DNG to ensure your image will always be supported in the future. There is theoretical merit to this, but history has shown the formats remain well supported over time. I believe the risk is low, and you could convert files to DNG in the future if some update dropped support for legacy formats.

You may use the latest lossy DNG compression from Adobe to compress your images by 90%. The loss of quality here is so small that it would not show in a print. These are excellent images which are visually lossless. The potential risk here is that some future RAW software feature requires the original mosaic data. That was the case for some AI denoise, but support for the linear DNG formats seems to be expanding. If you don’t care about file size, you can skip this to keep your options open. But if you want a massive reduction in file size, this is a great option.

PSB / PSD / TIF

For your working files, it is critical to use a file format which supports layers. That means PSB (“Photoshop Big”), PSD (“Photoshop Document”), or TIF (“tagged image file format”). All three support the same image quality and features. The only difference is in maximum file size and support for the format.

Use PSB if possible. It is supported by Photoshop, Lightroom, Bridge, Affinity (importing), Photomator, and more. The file size is effectively unlimited, and that is very helpful for exposure blending, 32-bit HDR, large prints, etc. You can resave the image as TIF if necessary (assuming it fits in the 4GB limit).

If you use software which does not support PSB, then TIF is a great option. It is limited to 4GB (which is much better than the 2GB limit of PSD).
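To get a feel for when these limits start to matter, here is a back-of-the-envelope estimate. It assumes every layer is a full-size, uncompressed RGB pixel layer and ignores masks, smart objects, and compression, so real files will differ. The limits are the ones quoted above (interpreted here as binary gigabytes), and the function names are hypothetical.

```python
GIB = 1024 ** 3
# Limits as described in this article; PSB is treated as effectively unlimited.
LIMITS = {"PSD": 2 * GIB, "TIF": 4 * GIB, "PSB": None}


def uncompressed_bytes(width: int, height: int, bits: int,
                       layers: int, channels: int = 3) -> int:
    """Rough size of a layered file if every layer is full-size pixel data."""
    return width * height * channels * (bits // 8) * layers


def formats_that_fit(size_bytes: int) -> list:
    """Formats whose size limit is at least the estimated file size."""
    return [name for name, limit in LIMITS.items()
            if limit is None or size_bytes <= limit]


# Hypothetical example: a 45 megapixel image (8256 x 5504) edited at 16 bits.
for layers in (1, 10, 20):
    size = uncompressed_bytes(8256, 5504, 16, layers)
    print(f"{layers:>2} layers: {size / GIB:.2f} GiB -> {formats_that_fit(size)}")
```

Under these assumptions, ten full layers already exceed the PSD limit and twenty exceed the TIF limit, which is why PSB becomes the comfortable default for exposure blending and other layer-heavy work.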

JPG

The main benefit of JPG is that it is universally supported. It offers high quality standard images at a reasonable file size, but there are several better file formats we’ll get to below. Once those formats gain enough support, we will likely see JPG usage decrease significantly. It’s had a great 33 year run, but it will soon be time to start replacing JPG.

JPG may support HDR by using a gain map. This makes it an ideal option for HDR today as it offers 100% compatibility. The viewer will always see a great result on any browser, and it adapts in an ideal way to the capabilities of the monitor. But compatibility is again the key benefit, and another format with gain map support will likely replace it in the future.

JPG has several limitations which are overcome by other file formats:

- Modest compression. Many newer file formats are smaller.

- Limited bit depth, putting the image at risk of banding (in rare cases) and making them unsuitable for further editing.

- No support for CICP encoding of color, which further increases file size and increases the risk that a web service will strip the profile and therefore make wide gamut color unusable.

- No support for transparency.

AVIF (“AV1 Image File Format”)

AVIF is the most promising next generation file format. It is already supported on all modern browsers.

It offers numerous benefits, including:

- Outstanding compression, often reducing file size by 30% compared to JPG.

- Up to 12-bit depth.

- Supported on the latest versions of all major browsers (for SDR)

- HDR (both natively at high bit depth and more importantly with gain maps to adapt optimally to any display)

- Transparency

- Animation

AVIF offers Lossless compression, but the files are rather large.

AVIF is reported to offer higher quality than JXL at extreme compression levels, but that has not been my experience with Adobe software. It might be true for other encoders.

Limitations of AVIF:

- Resolution is limited to 8K (for a single tile, up to 65,536 is possible but may show artifacts at edges). This is fine for the web, but could limit your print size to 14×26″.

- Maximum 12-bit depth, making it not suitable for further editing in many cases.

- No progressive rendering.

- Slow encode speeds / higher energy consumption.
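The print size figure in the resolution limit above comes from simple arithmetic. As a sketch, assuming “8K” means 7680×4320 pixels and a print resolution of 300 pixels per inch (both are my assumptions, and your lab may recommend a different resolution):

```python
def print_size_inches(px_width: int, px_height: int, ppi: int = 300):
    """Largest print (in inches) at the given pixels-per-inch resolution."""
    return px_width / ppi, px_height / ppi


width_in, height_in = print_size_inches(7680, 4320)
print(f"{width_in:.1f} x {height_in:.1f} inches at 300 ppi")
```

That works out to roughly 14×26 inches. Printing at a lower resolution (large prints are often viewed from farther away) would stretch the same pixels over a bigger sheet.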

Due to the time it takes for people to upgrade their browsers, real world support is already at 94%. All modern browsers support SDR rendering of AVIF and all browsers supporting HDR will show HDR AVIF. Additionally, AVIF with ISO gain map support is already supported by default in Chrome, Edge, Brave, Opera, Safari (macOS 26), and other Apple software. Taken together, that means that you should be able to share AVIF on the web in 2026. And if you’re willing to provide backup JPGs or wish to ignore a few people using very old browsers, you could start using it now for SDR images (wait for gain map support if you create HDR). AVIF is the key format to watch and should start to gain significant use soon.

Given outstanding image quality, small file sizes, and excellent support, AVIF is an excellent candidate to replace JPG. The JXL file format has some additional benefits, but a lack of support makes AVIF a much more viable option for the foreseeable future.

JXL (JPEG XL)

JPEG XL (file extension “JXL”) is a next generation file format. Don’t let the name fool you, JXL is not a JPG and compatibility is very low (which is the key reason it isn’t used much currently).

It offers numerous benefits, including:

- Outstanding compression, often reducing file size by 30% compared to JPG.

- Lossless compression.

- Up to 32-bit depth (or as low as 8, depending on your needs).

- HDR (both natively at high bit depth and more importantly with gain maps to adapt optimally to any display)

- Better progressive rendering – a low-res preview can show almost immediately (whereas JPG loads sequentially and AVIF won’t show anything until the full base image is downloaded)

- Faster encoding than AVIF

- Existing JPGs may be converted to JXL with no further loss of image quality (making this ideal for migrating existing web content)

- Unlimited file size – supports over 1 billion pixels in each dimension

- Even fewer artifacts than AVIF (though AVIF is outstanding)

- Transparency

- Animation

If JXL were widely supported, this would be my file format of choice. It offers a better experience on slow internet connections (progressive rendering), has faster encoding speeds, better decode speed / power efficiency, and is a much better choice for sending flat images for printing or further editing (due to higher bit depth and unlimited dimensions).

However, browser support is currently very limited. That would likely change if Chrome would offer support, but it would take some time for upgrades even if all browsers supported it. So even if Google changes its policy now, AVIF is the most promising candidate to replace JPG in the near term.

JXL is the basis of Adobe’s new lossy compression, so you may already be using it without realizing it. Aside from that, JXL is an ideal format for sharing high quality images with other creatives for further editing. It’s also an ideal file format to send to your print lab, as the file size is much smaller than TIF and the quality is much higher than JPG.

HEIF

The key benefits of HEIF:

- Outstanding compression, often reducing file size by 30% compared to JPG.

- Up to 16-bit depth (though many decoders only support 8)

The future of HEIF is unclear, and there appears to be little momentum for other browsers to add support. It’s well supported by Apple, but Apple devices support AVIF (including with gain maps) and JXL. So if you are exporting images for the web or for Apple devices, AVIF is a better choice. The only place where photographers should consider using HEIF currently is when capturing images on an Apple device (as the quality is higher than JPG), but any subsequent exports of those images would ideally be done as AVIF.

WebP

The webp file format was created in 2010. It has some good uses as a JPG alternative, but has seen limited use as the benefits have not been compelling enough.

The key benefits of webp:

- Better compression than JPG (though not as good as AVIF, JXL, and HEIF)

- Transparency

- Animation

Further growth of WebP seems very unlikely. It has several deficiencies compared to other newer file formats.

The key limitations of webp are:

- Limited to 8-bits

- No support for HDR (no gain maps)

- Support is not universal due to older browsers (96%)

- Compression and quality are lower than AVIF / JXL

PNG

The main benefit of PNG is broad support in a format capable of supporting both transparency and lossless encoding. Transparency is the key benefit for the web, and lossless encoding is mostly important for archiving (especially for governments and museums, which may not yet support next generation formats). A gain map spec for PNGs is coming, but this is likely to be used only for archival work (and perhaps not even that by the time it is ready). Like JPG, PNG should soon be replaced by better formats on the web.
